ColorRL for E2E Instance Segmentation: A Quick Overview

The paper “ColorRL: Reinforced Coloring for End-to-End Instance Segmentation” proposes an RL-based instance segmentation algorithm. Unlike the previous post on interactive segmentation, this one focuses on training a model for segmenting instances in a single pass. Specifically, the algorithm uses reinforcement learning to separate a single segmentation into individual instances.

The ColorRL Model

ColorRL Model Architecture

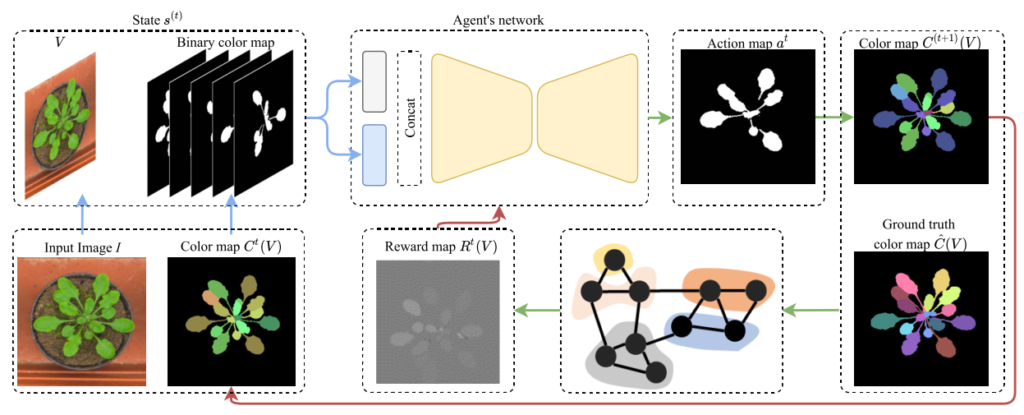


The figure above shows the architecture of the proposed model. Let’s go through its main parts.

State

The state consists of two parts:

- Input image: An array of size HxWxCH (CH = number of image channels)
- Binary color map
  - This is an array of size HxWxT, containing binary values (0 or 1)
  - H = Height, W = Width, T = Coloring steps (also equivalent to the maximum number of instances)
  - It is initialized to zeros.
  - Note: Since the array contains binary values, it can also be represented as an array of size HxW, where each element is an integer with a maximum value of 2^T - 1.

Accordingly, the state is an array of size HxWx(CH+T).
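To make the shapes concrete, here is a minimal NumPy sketch of the state described above. The toy sizes, the variable names, and the `pack_labels` helper are my own illustration and do not come from the paper's code; in particular, treating channel t as bit t is just one possible packing.

```python
import numpy as np

H, W, CH, T = 4, 5, 3, 3  # toy sizes, chosen only for illustration

image = np.random.rand(H, W, CH).astype(np.float32)  # input image, H x W x CH
color_map = np.zeros((H, W, T), dtype=np.float32)    # binary color map, all zeros at the start

# State: image and color map stacked along the channel axis -> H x W x (CH + T)
state = np.concatenate([image, color_map], axis=-1)


def pack_labels(color_map):
    """Collapse an H x W x T binary map into an H x W integer label map.

    Channel t is treated as bit t (an assumption), so labels fall in [0, 2**T - 1].
    """
    weights = 2 ** np.arange(color_map.shape[-1])
    return (color_map.astype(np.int64) * weights).sum(axis=-1)


labels = pack_labels(color_map)
```

The packed form is handy for comparing pixels: two pixels belong to the same instance exactly when their integer labels are equal.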

ColorRL Agent

First, the image and the binary color map are processed using a neural network. Next, the concatenated results pass through the agent’s encoder-decoder network.

The agent, in this case, is actually a collection of sub-agents, where each sub-agent is in charge of a single pixel of the image. Here, every sub-agent is a Deep Belief Network (DBN) that looks at the neighboring pixels in the input state and predicts an action. If you’re not too familiar with DBNs, take a look at this article for an overview.

Action

The action denotes whether the respective pixel is supposed to be part of the current instance or not. Accordingly, the combination of all these binary pixel values forms the segmentation mask of the current instance.

This segmentation mask then replaces the t-th frame (channel) of the binary color map, where t is the current coloring step. It is also used to calculate the reward.
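As a rough sketch of this update step, the snippet below writes a binary action mask into one frame of the color map. The function name `apply_action` and the hand-written mask are hypothetical; in the real model the mask would come from the per-pixel agents.

```python
import numpy as np


def apply_action(color_map, action, t):
    """Return a copy of the color map with frame t replaced by the action mask."""
    assert action.shape == color_map.shape[:2]
    updated = color_map.copy()
    updated[..., t] = action
    return updated


H, W, T = 3, 3, 2
color_map = np.zeros((H, W, T), dtype=np.uint8)

# Stand-in for the agents' output at step t = 0: 1 = pixel belongs to the current instance
action = np.array([[1, 1, 0],
                   [1, 0, 0],
                   [0, 0, 0]], dtype=np.uint8)

color_map = apply_action(color_map, action, t=0)
```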

Reward

Graph Formulation

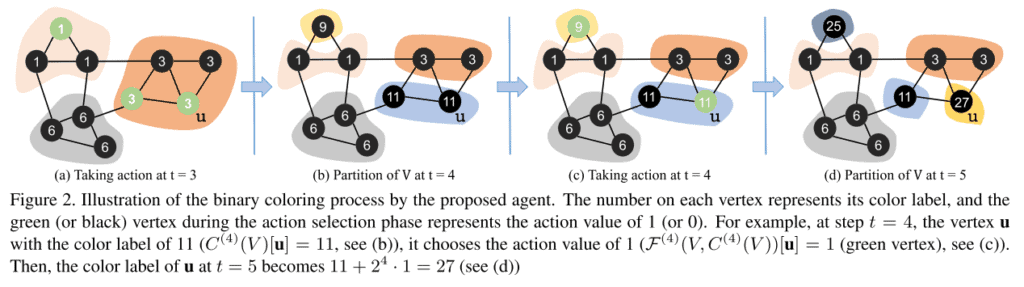

To define the reward, the authors formulate the binary color map as a graph:

- Vertices: Each pixel is a vertex in the graph.
- Edges: Two pixels are connected by an edge if they are adjacent to each other.
- Partitions: Pixels that belong to the same instance (have the same value) are grouped into partitions.

Accordingly, you can think of the outcome of each action as one of the following:

- Split: Two connected pixels of the same partition are separated into different partitions.
  - In other words, two pixels that were previously of the same instance are now in separate instances.
- Merge: Two connected pixels of different partitions are combined into the same partition.
  - In other words, two pixels that were previously of different instances are now in the same instance.

This process is visualized and described in the following image from the paper.

Binary coloring process in ColorRL

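The split and merge outcomes described earlier can be detected by comparing, edge by edge, whether two adjacent pixels share a label before and after an action. The sketch below assumes 4-connectivity and integer label maps; the function names are mine, not the paper's.

```python
import numpy as np


def same_partition(labels):
    """For each 4-connected edge, report whether both endpoints share a label.

    Returns (horizontal, vertical) boolean arrays of shapes H x (W-1) and (H-1) x W.
    """
    horizontal = labels[:, :-1] == labels[:, 1:]
    vertical = labels[:-1, :] == labels[1:, :]
    return horizontal, vertical


def split_and_merge(before, after):
    """Classify each edge by comparing label maps before and after an action."""
    result = []
    for was_same, is_same in zip(same_partition(before), same_partition(after)):
        split = was_same & ~is_same   # same partition before, different now
        merge = ~was_same & is_same   # different partitions before, same now
        result.append((split, merge))
    return result


before = np.array([[0, 0, 0],
                   [0, 0, 0]])
after = np.array([[1, 1, 0],
                  [1, 1, 0]])
(h_split, h_merge), (v_split, v_merge) = split_and_merge(before, after)
```

In this toy example, coloring the left 2x2 block splits the horizontal edges between the second and third columns; no edge is merged.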

Reward Function

Simply put, the reward function checks if a certain split/merge fixed a previously incorrect partition or broke a previously correct partition. In other words:

- Merging Reward: If two pixels of separate instances are combined,
  - If the two pixels are in the same partition in the ground truth graph => Positive reward
  - If not => Negative reward
- Splitting Reward: If two pixels of the same instance are separated,
  - If the two pixels are in the same partition in the ground truth graph => Negative reward
  - If not => Positive reward
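The four cases above can be sketched as a per-edge reward. This is only an illustration: the +1/-1 magnitudes, the restriction to horizontal edges, and the function names are my assumptions, and the paper's actual reward values may differ.

```python
import numpy as np


def edge_same(labels):
    """Same-partition indicator for horizontal edges only (shape H x (W-1))."""
    return labels[:, :-1] == labels[:, 1:]


def split_merge_reward(before, after, truth):
    """Per-edge reward; the +1 / -1 values are illustrative, not from the paper."""
    was_same, is_same, gt_same = edge_same(before), edge_same(after), edge_same(truth)
    merged = ~was_same & is_same
    split = was_same & ~is_same

    reward = np.zeros(was_same.shape, dtype=np.float32)
    reward[merged & gt_same] = 1.0    # merged pixels that belong together
    reward[merged & ~gt_same] = -1.0  # merged pixels that should stay apart
    reward[split & ~gt_same] = 1.0    # split pixels that should be apart
    reward[split & gt_same] = -1.0    # split pixels that belong together
    return reward


truth = np.array([[1, 1, 2, 2]])
before = np.array([[0, 0, 0, 0]])
after = np.array([[1, 1, 0, 0]])
reward = split_merge_reward(before, after, truth)
```

Here the action separates the second and third pixels, which are also in different ground truth partitions, so that one edge receives a positive reward and the untouched edges receive zero.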

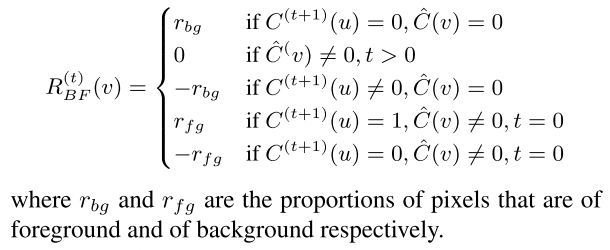

In addition to the above two rewards, the authors also propose rewarding the agent when it predicts a foreground pixel as foreground or a background pixel as background. The following reward function describes this Background-Foreground Reward:

The background-foreground reward function from the ColorRL paper


Finally, the sum of all three of these rewards (weighted by some matrices) forms the final reward function.
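As a loose sketch of how the three terms might be combined, the snippet below computes a simple background-foreground reward and an element-wise weighted sum. Several things here are assumptions on my part rather than the paper's definitions: label 0 stands for background, a correct pixel earns exactly 1, all three rewards have already been reduced to per-pixel HxW maps, and the weights are HxW arrays.

```python
import numpy as np


def background_foreground_reward(labels, truth):
    """Return 1.0 where the predicted foreground/background status matches the truth.

    Assumes label 0 means background (an illustrative convention).
    """
    return ((labels > 0) == (truth > 0)).astype(np.float32)


def total_reward(r_merge, r_split, r_bgfg, w_merge, w_split, w_bgfg):
    """Element-wise weighted sum of three per-pixel reward maps."""
    return w_merge * r_merge + w_split * r_split + w_bgfg * r_bgfg


truth = np.array([[0, 1],
                  [2, 2]])
labels = np.array([[0, 1],
                   [0, 3]])
r_bgfg = background_foreground_reward(labels, truth)

# Placeholder merge/split rewards and weights, only to show the shapes involved
zeros = np.zeros_like(r_bgfg)
ones = np.ones_like(r_bgfg)
reward = total_reward(zeros, zeros, r_bgfg, ones, ones, 0.5 * ones)
```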

Final Thoughts

That’s it! I hope this was helpful and of course, let me know if you would like any clarification about any part. Feel free to check out the rest of my blog and see if anything else seems interesting.